
How Slow Can You Go?

I get tired of reading people just blindly saying that MAME gets slower with each release. A lot of people attribute it to dumb things like adding more games, or making some completely benign change in the core, or supporting Mahjong games, or other silly nonsense.

Yes, you can look at the history of MAME over time and see that the system is overall slower than it used to be. However, the performance is also quite stable over long stretches of time. What you really see is a few abrupt performance drops here and there. Generally this is due to jettisoning features or hacks that were optimized for lower-end systems, in exchange for simpler, clearer code.

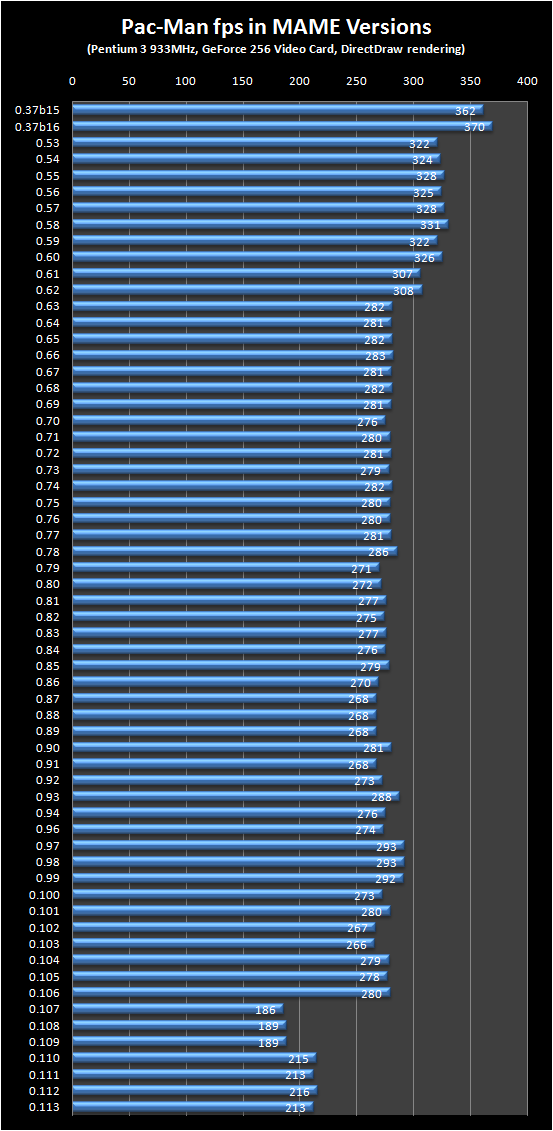

In an effort to get a handle on MAME's performance curve over the last few years, I've benchmarked the Pac-Man driver across all of the native Windows ports of MAME, starting with the first one I did back in version 0.37b15. The main reason I did not include the DOS versions is that they don't support the -nothrottle and -ftr/-str command line options that make benchmarking possible.
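For illustration, here is a rough Python sketch of how one benchmark command line might be assembled around those options. The executable name, the `pacman` driver name, and the assumption that the limit is passed as a number right after the flag are all hypothetical; the exact syntax may well differ between releases.

```python
def benchmark_command(exe, game="pacman", limit_flag="-str", limit=60):
    """Build an argument list for one unthrottled benchmark run.

    exe        -- path to a MAME executable (hypothetical, one per release)
    game       -- driver to run (assumed name)
    limit_flag -- "-ftr" or "-str", the two run-limit options named above
    limit      -- value passed to the limit flag (assumed to follow the flag)
    """
    if limit_flag not in ("-ftr", "-str"):
        raise ValueError("limit_flag must be -ftr or -str")
    # -nothrottle lets the emulation run as fast as the host allows,
    # so the run measures raw speed instead of being capped at full speed.
    return [exe, game, "-nothrottle", limit_flag, str(limit)]
```

The resulting list could be handed to something like `subprocess.run`, once per release, with the same limit each time so that the runs are comparable.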

I picked Pac-Man because the driver itself really hasn't changed all that much over the years. The chart at the right shows the results of benchmarking on a year 2000-era computer (Dell Dimension 4100, 933MHz Pentium III) with a year 2000-era graphics card (nVidia GeForce 256) running Windows XP. If you look at the chart, you can see periods of performance stability followed by drops here and there, each due to a specific change to the MAME core that favored more modern systems and simpler code.

The first drop came with the 0.53 release (about a 10% speed hit). That was the first release where we dropped support for 8-bit palette modes. This meant that all internal bitmaps for rendering were 16 bits or greater. Although Pac-Man doesn't need 16-bit rendering, the simplification of removing tons of rendering code associated with supporting both types of rendering made this a good tradeoff.

After that, performance was roughly stable until the 0.61 release, where it took another 6% hit. This was the release where we removed dirty bitmap support. Previously, drivers could optimize their rendering by telling the OS-dependent code which 8x8 areas of the screen had changed, and which were static. As you can see from Pac-Man, much of the screen is static most of the time, so this optimization helped. Again, though, it added a lot of complexity to the code and obscured a lot of the real behaviors.
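To make the idea concrete, here is a minimal Python sketch of dirty-tile tracking. It is not the code MAME used; the class name, its methods, and the 224x288 screen size in the usage note are assumptions made purely for illustration.

```python
class DirtyGrid:
    """Tracks which tile-sized areas of the screen changed since the last flush."""

    def __init__(self, width, height, tile=8):
        self.tile = tile
        # Round up so partial tiles at the right and bottom edges are covered.
        self.cols = (width + tile - 1) // tile
        self.rows = (height + tile - 1) // tile
        # Everything starts dirty so the first frame is drawn in full.
        self.dirty = [[True] * self.cols for _ in range(self.rows)]

    def mark(self, x, y):
        """Called by the driver when it changes the pixel at (x, y)."""
        self.dirty[y // self.tile][x // self.tile] = True

    def flush(self):
        """Return the (col, row) tiles that need redrawing, then clear them."""
        tiles = [(c, r)
                 for r in range(self.rows)
                 for c in range(self.cols)
                 if self.dirty[r][c]]
        for c, r in tiles:
            self.dirty[r][c] = False
        return tiles
```

With a 224x288 screen, the first `flush()` returns all 1008 tiles. After that, a frame in which only one pixel changed returns a single tile, and the blitter can skip the rest. The cost is that every driver has to call `mark()` correctly, which is the kind of bookkeeping that was removed.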

You'll note another 8% drop in the 0.63 release. This one I haven't yet investigated enough to understand; I'll update this post once I figure out what happened here. One change in that release is that we upgraded to a newer version of GCC; it's possible that in the case of Pac-Man, this impacted performance.

From that point on, right up until 0.106, Pac-Man performance was pretty flat. It varied a bit release to release, but really was quite steady until the big video rewrite. At that point, performance took a pretty significant hit (about 30%). The primary reason for this was that the Windows code was changed to always choose a 32-bit video mode if available, rather than supporting 16-bit video modes. The reasoning behind this decision is that palette values are computed to full 24-bit resolution internally, and even though Pac-Man doesn't use more than 32 colors itself, those 32 colors aren't perfectly represented by a 16-bit video mode.
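The precision argument can be sketched in a few lines of Python. This assumes the 16-bit mode uses a 5-6-5 bit layout (some modes use 5-5-5 instead), and the sample color in the usage note is a hypothetical value, not one taken from Pac-Man's palette.

```python
def to_rgb565(r, g, b):
    """Pack 8-bit-per-channel RGB into a 16-bit 5-6-5 value (low bits are dropped)."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def from_rgb565(value):
    """Expand a 5-6-5 value back to 8 bits per channel by replicating the high bits."""
    r5 = (value >> 11) & 0x1F
    g6 = (value >> 5) & 0x3F
    b5 = value & 0x1F
    return ((r5 << 3) | (r5 >> 2),
            (g6 << 2) | (g6 >> 4),
            (b5 << 3) | (b5 >> 2))

def roundtrip_error(rgb):
    """Per-channel absolute error after a trip through the 16-bit format."""
    back = from_rgb565(to_rgb565(*rgb))
    return tuple(abs(a - b) for a, b in zip(rgb, back))
```

For example, `roundtrip_error((71, 184, 151))` gives `(5, 2, 3)`: the color that comes back out of the 16-bit mode is close to, but not the same as, the one that was computed. Pure white, `(255, 255, 255)`, survives unchanged.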

Interestingly, things got about 15% better in 0.110. Again, I haven't yet done the analysis to figure out why.
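As a rough piece of arithmetic, the individual changes can be compounded to estimate where Pac-Man ends up relative to the starting point. This assumes each percentage quoted above is relative to the release just before it, which may not match how the chart was measured, and it ignores the small release-to-release wobble.

```python
def cumulative_speed(changes_pct):
    """Compound a series of percentage speed changes into one relative speed.

    Each entry is relative to the previous release (an assumption):
    -10 means 10% slower, +15 means 15% faster.
    """
    speed = 1.0
    for pct in changes_pct:
        speed *= 1.0 + pct / 100.0
    return speed

# 0.53, 0.61, 0.63, 0.106 and 0.110, using the rounded figures from the text
CHANGES = [-10, -6, -8, -30, +15]
```

Under that assumption, `cumulative_speed(CHANGES)` comes out to about 0.627, i.e. roughly 63% of the original speed after all five changes.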

So, what to take away from this? It's really just an interesting set of data points I've wanted to see for a while. Certainly, lower-end games like Pac-Man have suffered the most from the various core changes over the years. However, in addition to code cleanup, another reason for this is that we have shifted the "optimization point" (i.e., the games we target to run most efficiently) away from the lower-end games and more toward the mid-to-late '80s games. This means that we generally take for granted that lower-end games will run fine on today's machines (or even 7-year-old machines like my Dell), but the later games are the ones that need more attention.

One follow-up test I'd like to do is to try the same analysis with a driver that is more in the middle, perhaps something like Rastan, or a game that required 32-bit color support back in version 0.37b15. These games won't see any hit from the removal of dirty bitmap support or 8-bit color modes, or even from the switch to 32-bit video modes. I think the result would be a much more consistent graph.